Restricted Boltzmann Machines (RBM)

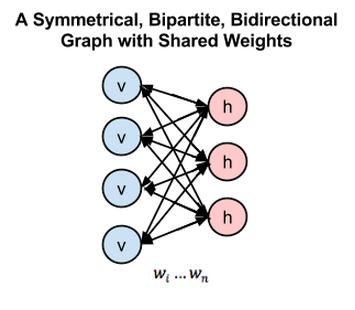

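An RBM is a type of stochastic artificial neural network with two layers: a visible layer representing the input data and a hidden layer capturing latent features. Unlike general Boltzmann machines, RBMs have no connections between units in the same layer (no visible-to-visible or hidden-to-hidden links). Every connection joins a visible unit to a hidden unit, so the network forms a bipartite graph, and this restriction simplifies both inference and training. Training adjusts the weights and biases to maximize the likelihood the model assigns to the training data, so that the model's learned distribution reproduces the patterns in the input. RBMs are used in unsupervised learning tasks such as feature learning and collaborative filtering, and as building blocks for pretraining deep neural networks. They are energy-based probabilistic models that define a joint probability distribution over visible and hidden states, and they have proven effective at capturing complex patterns and representations in data.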
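For the common case of binary visible units $v_i$ and binary hidden units $h_j$ (an assumption here, since the text above does not fix the unit type), the standard formulation uses visible biases $a_i$, hidden biases $b_j$, and weights $W_{ij}$ (notation chosen for this sketch). The energy function, the joint distribution it defines, and the resulting conditionals are:

$$
\begin{aligned}
E(\mathbf{v}, \mathbf{h}) &= -\sum_i a_i v_i - \sum_j b_j h_j - \sum_{i,j} v_i W_{ij} h_j \\
P(\mathbf{v}, \mathbf{h}) &= \frac{e^{-E(\mathbf{v}, \mathbf{h})}}{Z}, \qquad Z = \sum_{\mathbf{v}, \mathbf{h}} e^{-E(\mathbf{v}, \mathbf{h})} \\
P(h_j = 1 \mid \mathbf{v}) &= \sigma\Big(b_j + \sum_i v_i W_{ij}\Big), \qquad
P(v_i = 1 \mid \mathbf{h}) = \sigma\Big(a_i + \sum_j W_{ij} h_j\Big)
\end{aligned}
$$

Here $\sigma(x) = 1/(1 + e^{-x})$. Because there are no within-layer connections, the hidden units are conditionally independent given $\mathbf{v}$ (and the visible units given $\mathbf{h}$), so each conditional reduces to an independent sigmoid per unit. Maximizing the likelihood means maximizing $P(\mathbf{v}) = \sum_{\mathbf{h}} P(\mathbf{v}, \mathbf{h})$ over the training data. The partition function $Z$ sums over every possible configuration and is intractable for realistic layer sizes, which is why approximate training methods such as contrastive divergence are used.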

source: https://wiki.pathmind.com/restricted-boltzmann-machine

What happens in an RBM:

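- Forward Pass (Positive Phase): The input data is presented to the visible layer, and each hidden unit computes its activation probability from the weighted sum of the visible units plus its own bias (passed through a sigmoid for binary units). Binary hidden states can then be sampled from these probabilities. This is called the positive phase because its statistics are collected with the visible layer clamped to real training data.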

- Backward Pass (Negative Phase or Reconstruction): The hidden activations are then sent back through the same weights to reconstruct the input in the visible layer, and the hidden probabilities are recomputed from that reconstruction. This is called the negative phase or reconstruction phase because it works with data generated by the model itself rather than with real data. During training, the statistics gathered from the reconstruction are compared with those gathered from the actual input.
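The two passes above can be sketched in plain Python as follows. This is a minimal illustration assuming binary (Bernoulli) units, a weight matrix `W` indexed as `W[i][j]` (visible unit `i`, hidden unit `j`), visible biases `a`, and hidden biases `b`; all of these names, and the helper functions, are hypothetical and chosen for this sketch. A practical implementation would normally use vectorized array operations instead of nested loops.

```python
import math
import random

# Minimal sketch assuming binary (Bernoulli) units.
# W[i][j] links visible unit i to hidden unit j; a = visible biases, b = hidden biases.
# All names here are hypothetical, chosen for illustration.

def sigmoid(x):
    """Logistic function giving the activation probability of a binary unit."""
    return 1.0 / (1.0 + math.exp(-x))

def hidden_probs(v, W, b):
    """Forward pass: P(h_j = 1 | v) = sigmoid(b_j + sum_i v_i * W[i][j])."""
    return [sigmoid(b[j] + sum(v[i] * W[i][j] for i in range(len(v))))
            for j in range(len(b))]

def visible_probs(h, W, a):
    """Backward pass: P(v_i = 1 | h) = sigmoid(a_i + sum_j W[i][j] * h_j).
    The same weights are reused in the reverse direction."""
    return [sigmoid(a[i] + sum(W[i][j] * h[j] for j in range(len(h))))
            for i in range(len(a))]

def sample(probs, rng):
    """Turn probabilities into binary states: each unit is 1 with probability p."""
    return [1 if rng.random() < p else 0 for p in probs]

def reconstruct(v, W, a, b, rng):
    """Forward pass (sample hidden states), then backward pass (visible probabilities)."""
    h = sample(hidden_probs(v, W, b), rng)
    return visible_probs(h, W, a)

# Usage: a tiny, untrained RBM with 6 visible and 3 hidden units.
rng = random.Random(0)
n_visible, n_hidden = 6, 3
W = [[rng.gauss(0.0, 0.1) for _ in range(n_hidden)] for _ in range(n_visible)]
a = [0.0] * n_visible
b = [0.0] * n_hidden
v = [1, 0, 1, 1, 0, 0]
v_recon = reconstruct(v, W, a, b, rng)
error = sum((x - r) ** 2 for x, r in zip(v, v_recon))
print(f"squared reconstruction error: {error:.3f}")
```

The squared reconstruction error printed at the end is only a rough diagnostic; it is not the quantity an RBM is trained to optimize.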

The use of both forward and backward passes distinguishes RBMs from conventional feedforward neural networks, where activations flow only from input to output (backpropagation sends error gradients backward, not reconstructions of the input). This bidirectional processing is central to the standard RBM training algorithm, contrastive divergence (CD), which updates each weight in proportion to the difference between the visible-hidden correlations measured in the positive phase and those measured in the negative phase.
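A single contrastive divergence step with one reconstruction (CD-1) can be sketched as below, again assuming binary units and the hypothetical names `W`, `a`, `b`, and `cd1_update`. Following a common practical choice, the sketch samples binary states only for the first hidden activation and uses probabilities for the reconstruction and for the negative-phase hidden statistics.

```python
import math
import random

# Minimal CD-1 sketch assuming binary units; W[i][j] links visible i to hidden j,
# a = visible biases, b = hidden biases. All names are hypothetical.

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def cd1_update(v0, W, a, b, lr, rng):
    """One CD-1 step on a single example; updates W, a, b in place.

    Returns the reconstruction probabilities v1 (computed before the update).
    """
    n_v, n_h = len(a), len(b)
    # Positive phase: hidden probabilities with the visible layer clamped to the data.
    ph0 = [sigmoid(b[j] + sum(v0[i] * W[i][j] for i in range(n_v))) for j in range(n_h)]
    h0 = [1 if rng.random() < p else 0 for p in ph0]  # sampled binary hidden states
    # Negative phase: reconstruct the visible layer, then recompute hidden probabilities.
    v1 = [sigmoid(a[i] + sum(W[i][j] * h0[j] for j in range(n_h))) for i in range(n_v)]
    ph1 = [sigmoid(b[j] + sum(v1[i] * W[i][j] for i in range(n_v))) for j in range(n_h)]
    # Update: lr * (data correlations - reconstruction correlations).
    for i in range(n_v):
        for j in range(n_h):
            W[i][j] += lr * (v0[i] * ph0[j] - v1[i] * ph1[j])
    for i in range(n_v):
        a[i] += lr * (v0[i] - v1[i])
    for j in range(n_h):
        b[j] += lr * (ph0[j] - ph1[j])
    return v1

# Usage: fit two toy binary patterns and track squared reconstruction error per epoch.
rng = random.Random(42)
data = [[1, 1, 1, 0, 0, 0], [0, 0, 0, 1, 1, 1]]
n_visible, n_hidden = 6, 2
W = [[rng.gauss(0.0, 0.1) for _ in range(n_hidden)] for _ in range(n_visible)]
a = [0.0] * n_visible
b = [0.0] * n_hidden

errors = []
for epoch in range(1000):
    total = 0.0
    for v in data:
        v1 = cd1_update(v, W, a, b, lr=0.1, rng=rng)  # v1 reflects pre-update weights
        total += sum((x - y) ** 2 for x, y in zip(v, v1))
    errors.append(total)
print(f"error in first epoch: {errors[0]:.3f}, last epoch: {errors[-1]:.3f}")
```

Using probabilities instead of sampled states in the negative phase reduces sampling noise in the updates. Running more back-and-forth steps before collecting the negative statistics (CD-k) is a common variation.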